AI GPU Cloud

Bare metal GPU clusters and on-demand instances, optimized for distributed AI and HPC workloads.

Solutions

Built for every workload

Train massive AI models, deploy intelligent agents, or run HPC research. Firmus GPU Cloud delivers the compute, networking, and efficiency to accelerate your workloads.

Train LLMs

Multi-GPU clusters with Slurm scheduling and InfiniBand networking for fast, distributed training.

Deploy AI agents

Prototype and run agentic AI with containerized environments, NIM APIs, and GPU acceleration.

Operate ML pipelines

GPU-accelerated environments with observability, versioning, and automation via CLI, Terraform, and GitOps.

Run CUDA & HPC workloads

Direct GPU access for CUDA development, GPU kernel testing, and high-performance scientific computing.

GPU Specs

From containerized workloads to VMs and dedicated bare metal, Firmus GPU Cloud delivers the right GPU performance model for your scale.

Train faster. Scale smarter.

Built for advanced AI and HPC workloads, H200 instances deliver next-generation performance and scalability with liquid-cooled efficiency and full support for distributed training.

8x NVIDIA H200 Tensor Core GPUs (Hopper architecture, HBM3e)

8x 141 GB HBM3e (≈ 1.13 TB total)

NVLink 4.0 + NVSwitch 3.0 (900 GB/s bi-directional per GPU)

2x Intel Xeon Platinum 8462Y+

2 TB DDR5 5600 MT/s ECC

Dual 200–800 Gb/s InfiniBand or 400 Gb/s Ethernet (RDMA / RoCE v2 support)
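As a rough illustration of what these figures mean for capacity planning, the sketch below totals the HBM3e on one 8-GPU node and checks whether a model's weights would fit. The GPU count and per-GPU memory come from the spec list above; the 2-bytes-per-parameter default (BF16/FP16 weights), the 80% headroom factor, and the function names are assumptions made for this example, and the estimate covers weights only, not optimizer state, activations, or KV cache.

```python
# Back-of-the-envelope sizing for one H200 node, using decimal GB (1 GB = 1e9 bytes).
GPUS_PER_NODE = 8        # from the spec list
HBM_PER_GPU_GB = 141     # HBM3e per H200, from the spec list


def node_hbm_gb(gpus: int = GPUS_PER_NODE, per_gpu_gb: int = HBM_PER_GPU_GB) -> int:
    """Total GPU memory on one node, in GB."""
    return gpus * per_gpu_gb


def weights_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Approximate size of the model weights alone, in GB.

    bytes_per_param=2 assumes BF16/FP16 weights (an assumption, not a Firmus figure).
    """
    return params_billions * bytes_per_param


def fits_on_node(params_billions: float, bytes_per_param: int = 2,
                 headroom: float = 0.8) -> bool:
    """True if the weights fit within a fraction of node memory.

    headroom is a hypothetical safety factor that leaves room for
    activations and buffers; tune it for the actual workload.
    """
    return weights_gb(params_billions, bytes_per_param) <= headroom * node_hbm_gb()


if __name__ == "__main__":
    print(node_hbm_gb())       # 8 * 141 = 1128 GB, i.e. about 1.13 TB
    print(fits_on_node(70))    # 140 GB of weights against a 902.4 GB budget
```

Training typically needs several times the weight footprint, so treat a positive result here as a lower bound rather than a guarantee that a job will fit.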

Start training, testing, or deploying today with Firmus GPU Cloud

Optimized for AI developers

CUDA-ready environments, Jupyter notebooks, and NIM toolkits

All of this is included out of the box. With Slurm integration, observability, and hybrid cloud connectivity, you get GPU power without setup overhead.

Availability

The latest NVIDIA GPUs, available on demand or as reserved clusters

Layer onto your workflow

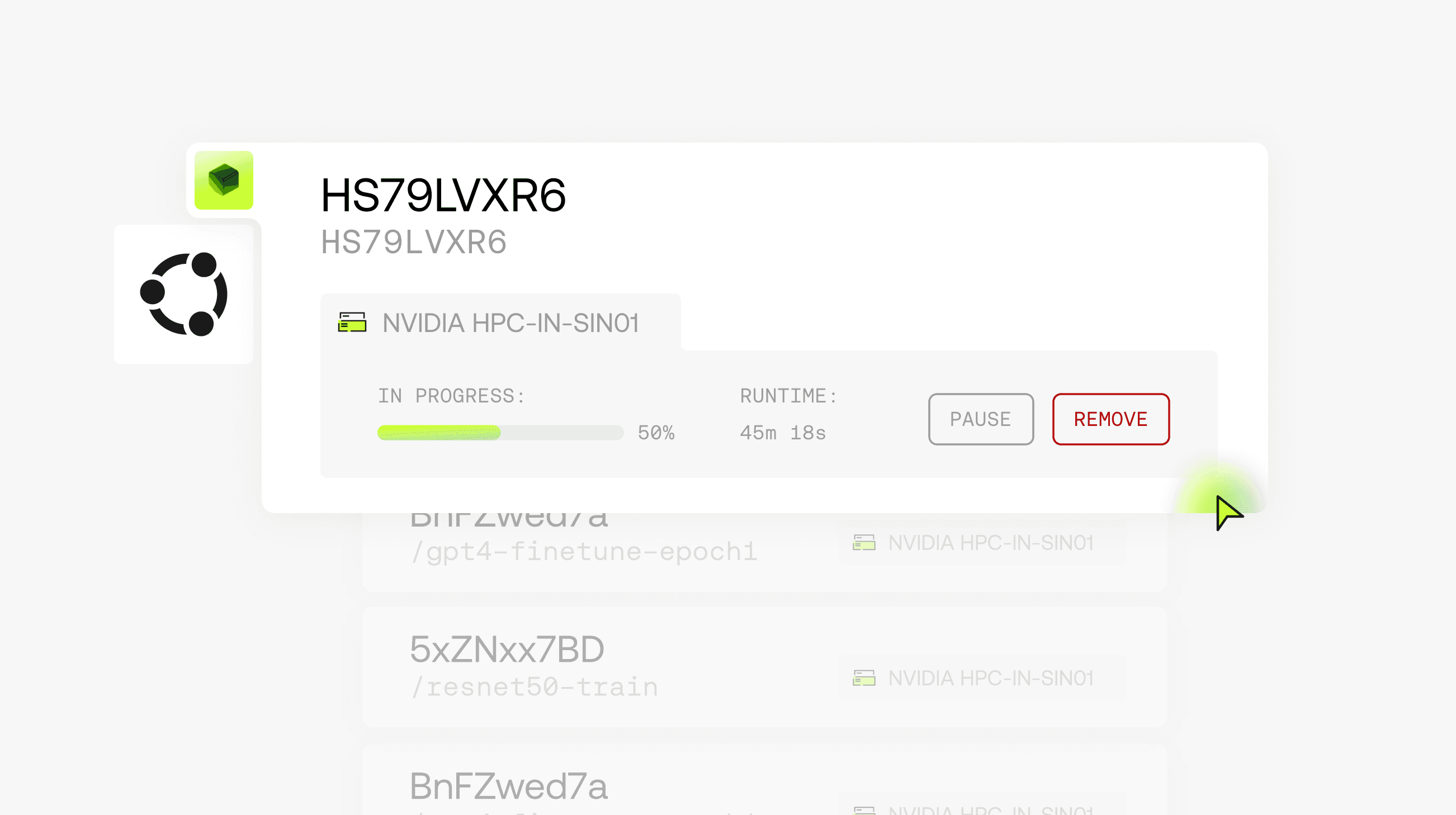

AI WORKBENCH

High-performance on-demand or reserved instances for AI workloads.

NIM INFERENCE APIS

Deploy AI development environments instantly with NVIDIA NIM.

OBSERVABILITY & MONITORING

Track GPU usage, job performance, and costs with built-in observability tools.

Transparent GPU pricing

Simple, predictable pricing for H100, H200, and GB300 instances. Scale with no surprises.